If an artificially intelligent (AI) bot was writing about synthetic media this week, the headline would read something like this: Humans really don’t care about us, except an Australian dark overlord called Nicolas Cage*.

When The Brag Media CEO Luke Girgis recently announced on LinkedIn that the company was planning to “experiment” with AI-generated content, media outlets barely blinked.

It had nothing to do with the entertainment publishing company’s decision to reveal the news on that particular platform, although I’m guessing the networking site was deliberately chosen because most of the benign comments left by its users could have been written by an AI bot.

Most news outlets were indifferent to The Brag boasting about using AI tools for content because, well, it simply wasn’t news.

We’ve read it all before.

If a robot named Terrance strutted into a newsroom declaring it had written an eloquent and insightful piece about giving birth to a child android, the media would’ve become suspiciously frantic.

The Brag Media – which owns Rolling Stone Australia, Variety and Tone Deaf – reassured no one in particular that jobs would not be lost with the introduction of AI copy.

And everyone knows journos get sacked or made redundant to appease the company’s bottom line, not some cyborg bot.

I get that automated journalism is working its way into newsrooms around the globe, but Rolling Stone magazine using an AI to write stories seems sacrilegious, especially given it is often referred to as a “rock bible”.

It wouldn’t be dissimilar to the Catholic Church claiming it was getting a bot to write a more sanitised version of the Bible, minus the ignorance, superstition and bigotry.

Could you imagine Rolling Stone co-founder Jann Wenner telling Hunter S. Thompson the following: “You know what, Hunter, I’ve decided the Mint 400 race looks like a dull affair, so I’m sending down our best robot. How interesting can a motorcycle race in the desert be?”

Hunter would’ve stormed into the offices of Rolling Stone on the back of a black stallion, pistols firing, screeching: “Where is the God damn bastard that started this?”

“Stand back you swines, my horse has swallowed half a kilo of the finest ketamine our God-forsaken country has ever produced.”

So what is synthetic media? It’s basically where artificially intelligent software uses algorithms to generate stories, so the copy is produced by computers instead of journalists.

Here is someone a lot smarter and more qualified to explain it: the author of The Robotic Reporter, Matt Carlson.

“The term denotes algorithmic processes that convert data into narrative news texts with limited to no human intervention beyond the initial programming choices,” he wrote.

“The growing ability of machine-written news texts portends new possibilities for an expansive terrain of news content far exceeding the production capabilities of human journalists.”

Yes, I know what you are thinking. I think he might be.

Author and blogger Sean Mackaay has also written a compelling piece about the future of AI.

So why are The Washington Post, The Associated Press, the BBC, Reuters, Bloomberg, The New York Times, The Wall Street Journal and Guardian Australia playing around with AI-driven copy?

Robot journalists can churn out yarns faster than most scribes, and can do their research a lot faster than this Luddite managed to learn about AI-generated media.

An editor still has to check the stories for errors and accuracy.

But if hacks aren’t wasting their time and talent on worthless yarns, they can put their energy into more meaningful stories.

Automated journalism at the moment has some serious limitations. It is dependent on highly structured data or, say, a video of a football match.

Of course, with this technology, there is always a darker and more sinister side.

AIs can generate “deepfake” videos and produce authentic-looking photos and images, which could lead to identity theft, fraud and counterfeiting cases.

They can create hate speech and generate racist and sexist stereotypes. I mean, social media has that covered in spades, so we don’t need more pile-ons.

There have also been some major concerns this week that university students could use ChatGPT to cheat on exams and assignments. But not even the most intelligent bot on the planet could answer, “what is the Marxist perspective of Jane Eyre?”

And what would universities do with all the bot-captured plagiarists, given a uni I worked at regarded students cutting and pasting large chunks of unattributed text into their work as a charming mistake?

And what about the reliability of AI algorithms?

Earlier this month, The Washington Post reported the tech site CNET had produced AI copy with some whopping errors.

“The bots have betrayed the humans,” wrote Paul Farhi.

“An automated article about compound interest, for example, incorrectly said a $10,000 deposit bearing 3 percent interest would earn $10,300 after the first year. Nope. Such a deposit would actually earn just $300.”
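For the record, the sum the bot fluffed fits in a few lines. This is only a sketch, assuming the interest is credited once at the end of the year; the function names are mine and have nothing to do with CNET or the Post.

```python
from decimal import Decimal


def first_year_interest(principal: Decimal, annual_rate: Decimal) -> Decimal:
    """Interest earned in year one, assuming it is credited once a year."""
    return principal * annual_rate


def balance_after_years(principal: Decimal, annual_rate: Decimal, years: int) -> Decimal:
    """Closing balance after compounding annually for the given number of years."""
    return principal * (1 + annual_rate) ** years


deposit = Decimal("10000")
rate = Decimal("0.03")

earned = first_year_interest(deposit, rate)       # what the deposit earns
balance = balance_after_years(deposit, rate, 1)   # what ends up in the account

print(f"Interest earned in year one: ${earned:,.2f}")
print(f"Balance after year one: ${balance:,.2f}")
```

The $10,300 in the quote is the closing balance, not the earnings, which is exactly the mix-up Farhi is describing.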

But the idea of media created or modified by algorithmic means is not new.

My research is undoubtedly a lot sketchier than a bot’s, but the internet told me that back in 2014 the Los Angeles Times published a yarn about an earthquake only minutes after it happened, thanks to a software robot called Quakebot.

It was created by database producer and LA Times journalist Ken Schwencke. Quakebot was able to scratch out a few pars about the quake based on data generated by the US Geological Survey.

Schwencke, who didn’t even bother to give the bot a shared by-line, got an email saying a story on the earthquake was ready to be published.

He then got a sarcastic message from Quakebot saying: “Thanks for the by-line, you git, and I’ve generated a photo of you online dressed in a Nazi uniform on your 21st birthday.”
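For fellow Luddites, turning structured data into a few pars can be as simple as filling in a template. What follows is a rough sketch and not Schwencke’s actual code: the field names, the strength thresholds and the sample quake are all invented for illustration.

```python
def describe_strength(magnitude: float) -> str:
    """Pick an adjective for the quake. The thresholds are illustrative guesses."""
    if magnitude >= 6.0:
        return "strong"
    if magnitude >= 4.5:
        return "moderate"
    return "light"


def write_quake_brief(event: dict) -> str:
    """Drop the structured fields of one event into a fixed sentence template."""
    strength = describe_strength(event["magnitude"])
    return (
        f"A {strength} magnitude {event['magnitude']:.1f} earthquake was reported "
        f"{event['distance_km']} km from {event['nearest_town']} "
        f"at {event['local_time']}, according to the {event['source']}."
    )


# A made-up event record, standing in for whatever a seismic data feed supplies.
sample_event = {
    "magnitude": 4.4,
    "distance_km": 8,
    "nearest_town": "Exampleville",
    "local_time": "6.25am",
    "source": "US Geological Survey",
}

brief = write_quake_brief(sample_event)
print(brief)
```

Every word the bot “writes” was chosen in advance by whoever built the template, which is why this sort of thing only works when the data arrives neatly labelled.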

Robot journalism was created. All the facts were there.

The earthquake story only mentioned the makes, models and origins of all the machinery that was damaged in the quake, despite the staggering human death toll. OK, nobody died, but then the previous sentence would’ve lacked the necessary absurdity.

LAQuakebot has more than 430,000 followers on Twitter. You can’t blame folk for wanting to get the news on a quake as quickly as possible.

But when AIs aren’t creeping into newsrooms, they are churning out lacklustre songs that sound eerily similar to Nickelback tunes.

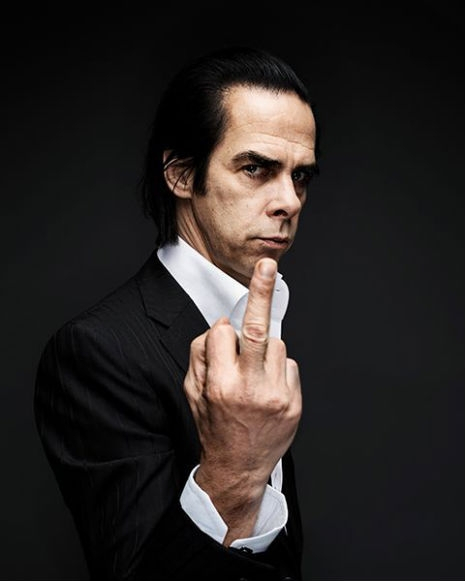

Last week, a Nick Cave fan in Christchurch asked the AI bot ChatGPT to produce a song “in the style” of the Australian singer/songwriter.

Cave’s response was merciless in its savaging of the bot.

Writing in his newsletter, The Red Hand Files, he called it “an act of self-murder” and “a grotesque mockery of what it is to be human”.

The Bad Seeds frontman then eviscerated the bot for its total failure to produce anything other than a soulless song.

“ChatGPT has no inner being, it has been nowhere, it has endured nothing…”

His wounding insults and mockery will not slow the progress of artificially created content.

AI is evolving and improving, but without an algorithm that can sniff out creativity and originality, the content it creates will always be emotionally sterile.

Bots cannot write articles with the vitality, grit, imagination and flair that humans can.

But as The Economist senior editor Kenn Cukier said: “We can’t be precious about this: it’s about what is best for the public, not what is best for journalists. We didn’t cling to the quill in the age of the typewriter, so we shouldn’t resist this either.”

In the future, the artificial intelligence puzzle will be solved.

I just hope if I do somehow manage to get a brief obit in the local rag after I die, the bot spells my name right.

*If I need to unpack this joke, then we should just part company now.
